Tabbed Document Interfaces (TDIs) have become mainstream nowadays. Did you ever stop and wonder why? Which problem has been solved by introducing them? In this post I will shortly take you through their history, explain why I feel tabs don’t solve the fundamental problem, and why Activity-Based Computing (ABC) might be a more appropriate solution.

History

Tabs aren’t as recent as most people think. They gained mainstream success once tabbed browsing was introduced (and no, Opera wasn’t the first web browser to do so), but early environments already supported them. Smalltalk (from Xerox PARC) was a programming language, but its windowed GUI is what eventually inspired windowing environments for personal computers.

Although not exactly tabs as we know them today, I found it important to show what most likely has been the inspiration for them. Since the tabs are always positioned at the top left of the window, you can imagine quickly running out of horizontal screen space if you were to outline them next to each other. In this sense you can argue these aren’t tabs at all, but just plain windows. This changed when UniPress’s Gosling Emacs moved the tab to the right hand side of the window. Since tabs take up a lot less space vertically, outlining them underneath each other doesn’t take up as much space as outlining them horizontally.

Don Hopkins presents having more than 10 windows open under HyperTIES, which is an Emacs based authoring tool. The tabs might not behave as they do today, but they solve a similar problem: allowing to have many documents open simultaneously and easily switching between them.

The problem

Having many documents open and switching between them is the task of a window manager, not specific applications. The definition of a window manager according to dictionary.com is:

A part of a window system which arranges windows on a screen. It is responsible for moving and resizing windows, and other such functions common to all applications.

When an application lets the operating system’s window manager handle all its individual windows separately, it has a single document interface (SDI). This approach quickly resulted in cluttered taskbars, which is why multiple document interfaces (MDIs) and tabbed document interfaces (TDIs) became popular. Limitations of the window manager forced applications to address problems which should have been addressed by window managers in the first place. Consequently, many applications are reinventing the wheel.

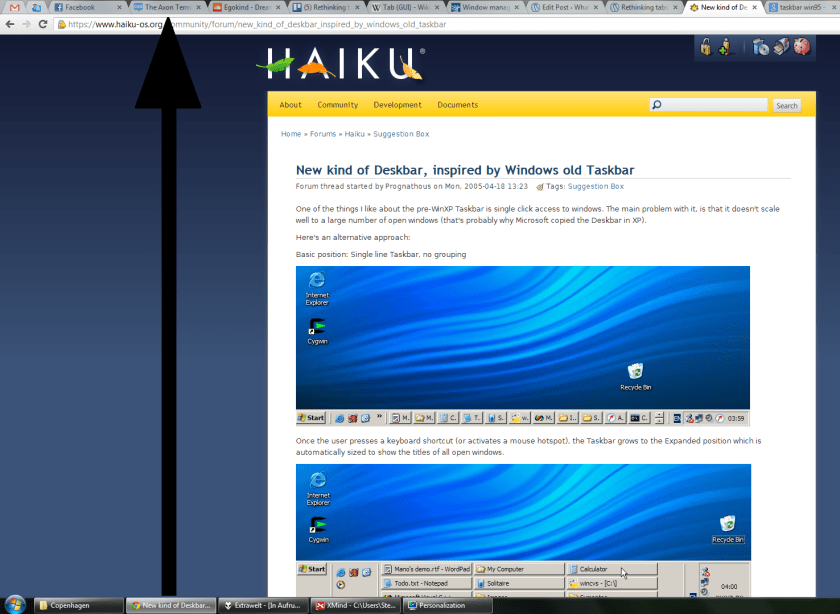

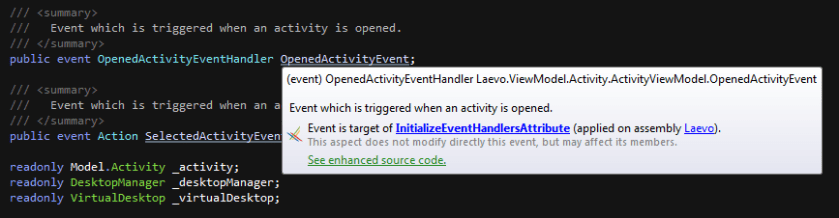

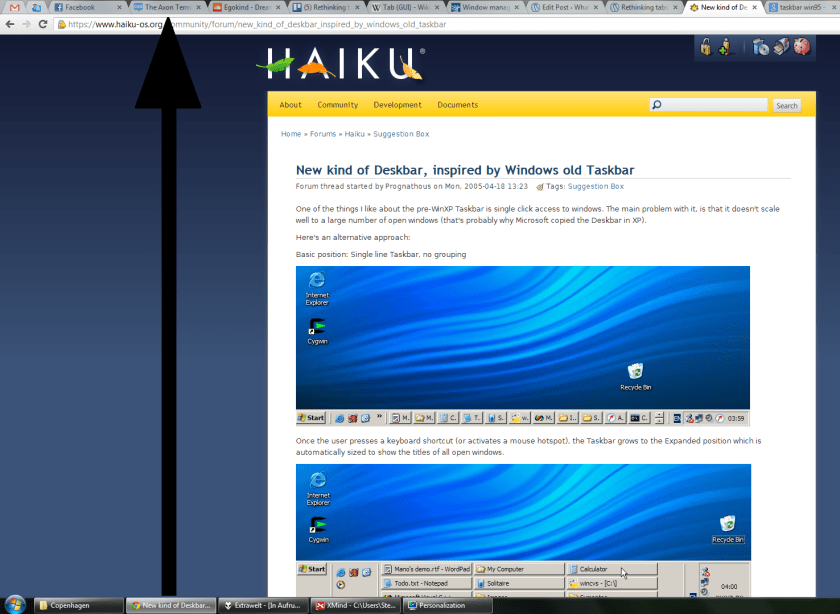

Remember that taskbar at the bottom of your screen (usually)? Remember what it used to do before it got all modernized and fancy new features were added? When you’d maximize all of your windows you basically had a tabbed interface with the tabs at the bottom. To exemplify I turned off some of the features in my current Windows 7 setup in order to make it resemble what it looked like in Windows 95, as also visualized in the website I have open.

All TDIs and MDIs are doing is moving the problem to a different location. They hide the clutter, only for it to reappear once the application has to manage many open documents itself. This can be useful when the open documents are related to each other, as is often the case in an integrated development environment (IDE), but this definitely isn’t the case for every application. Ironically web browsers are a prime example of applications which often manage unrelated open ‘documents’.

Imagine a desktop where every application decides for itself where to place the ‘close’ button or how to resize it’s window. The exact same thing is currently going on with document management.

Existing solutions

The problem should be handled by the window manager. Applications don’t need to re-implement the same functionality over and over again. This is in line with a principle in software development called ‘Don’t Repeat Yourself’ (DRY) which I hold in high regard. Additionally the same look and feel can be provided across applications. Some steps have been taken towards this end, of which I’ll discuss a few here.

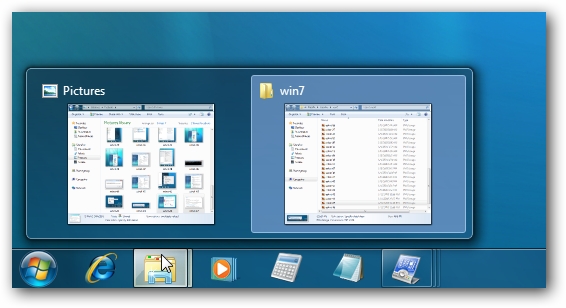

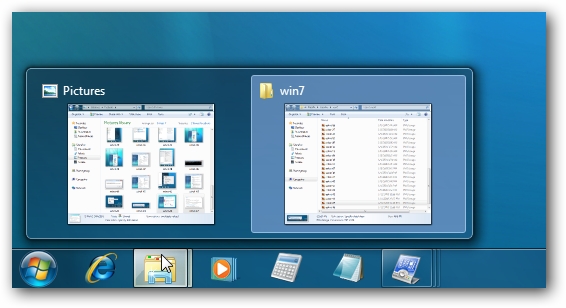

The windows 7 taskbar allows you to group windows together based on the application they belong to. In the screenshot below explorer windows are grouped together in one icon on the taskbar. Usually you know in which application you have a certain document open, so you can easily find it by performing just one extra step.

The problem I see here is the same as mentioned earlier: this simply moves the problem to a different location, and again, documents open in one application aren’t necessarily related to each other contextually. Additionally, even when the taskbar isn’t full, you still need to go through this extra step. (Note: you can configure to only group windows when the taskbar is full.)

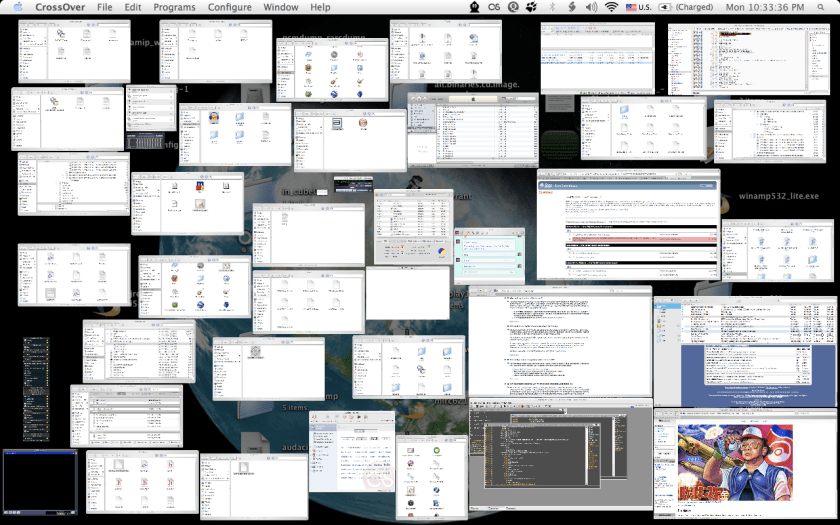

Some modern window managers allow you to group windows together manually.

Allowing you to manually organize windows at least gives you the flexibility to create your own context as you see fit, but unfortunately again these approaches don’t scale well.

… which of course begs the question, do they have to scale that well?

I prefer turning this question on its head and looking at it from an opportunistic angle.

Scaling window management

What if window management would be more scalable?

First, some food for thought. Yes, more questions …

- What is your main reason for closing a window?

- Why do you organize files?

- What is your main reason for not deleting a file?

- In what way do you organize windows?

- How does file management relate to window management?

A lot of effort goes into setting up the working environment for a particular activity. Wouldn’t it be nice if you were able to ‘store’ this setup and retrieve (or even reuse) it at a later time, just as you are able to store (and reuse) files?

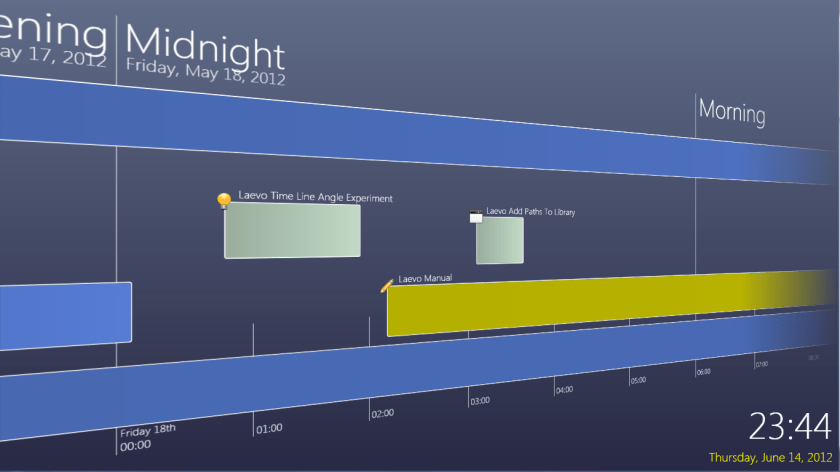

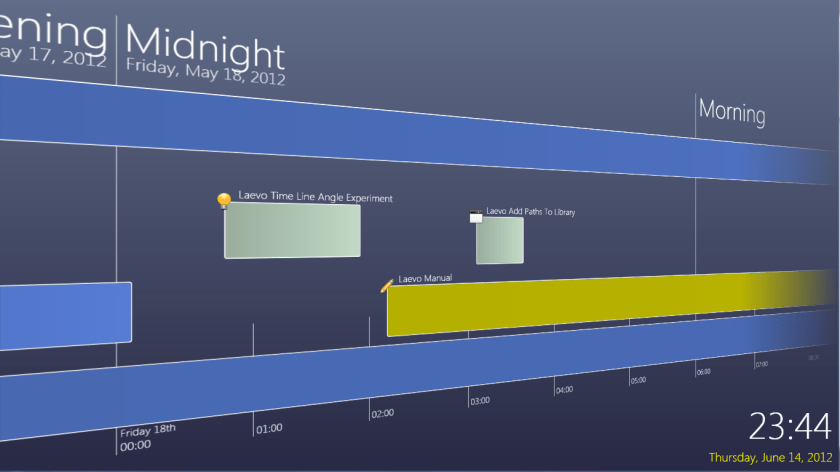

Perhaps the real problem isn’t how we access windows, but how we store them. In order to store and organize these different configurations of windows I proposed using a time line in my thesis.

This is inspired by Activity-Based Computing (ABC) which I wrote about previously, which states that activity should be the central computational unit instead of files and applications. In the context of window management activity can be seen as all the windows needed to achieve a certain goal. It’s up to you to decide what makes up a relevant context, organizing work as you like.

Taking this to the extreme: assume closing a window would be the same as deleting a file. Would you actually ever have to know about the underlying file system again? Window management and file management could become one and the same thing.